No, Conscientiousness Hasn't Collapsed Among Young People in Recent Years

How the Financial Times created a misleading meme and contributed to the firehose of nonsense on screen panic

Last week the Financial Times (FT) released a report decrying “The troubling decline in conscientiousness”. The subtitle made the tone of the argument clear: “A critical life skill is fading out — and especially fast among young adults.” But is it? Or did the FT engage in some statistical sorcery to make a minor trend look more dramatic than it was?

The FT article claims that conscientiousness…one of the Big5 personality variables…is on a steep decline, particularly among the young[1]. They based this on a database called the Understanding America Study (UAS), which is an internet-based panel study (more on that bit in a moment). The FT article also claimed inspiration from a 2022 study by Angelina Sutin and colleagues using the UAS dataset that found only very modest changes in conscientiousness and other personality variables during the early years of covid19.

The thing about the Big5 personality variables is that they have a significant genetic component to them and are generally considered stable over long time frames. Some personality change can occur but, even over long intervals, it tends to be modest. I asked a couple of personality scientists, Robert McCrae and Brent Donnellan, about the FT claims. Both were skeptical. To quote Dr. McCrae: “I am very skeptical.” Dr. McCrae passed my questions to Dr. Sutin who wrote “It would be nice to see the change replicated before making too much of it.”

Too late for that! The FT promoted their claims with little restraint and their graphs showing dramatic change were passed all over the internet. The FT also peppered their essay with causal assertions about what might have caused any change, despite not even providing correlational data. The FT essay reads a lot like an old man waving his cane at kids crossing his lawn…everything about modern society, from processed foods to streaming services, is bad…but the main culprit that gets mentioned is predictable: smartphones, social media and other screens.

Does the FT article have any actual data on screen time to correlate with conscientiousness? No. Of course, even then correlation doesn’t equal causation, but it bears repeating: the FT engaged in causal attributions without even bothering with correlational data.

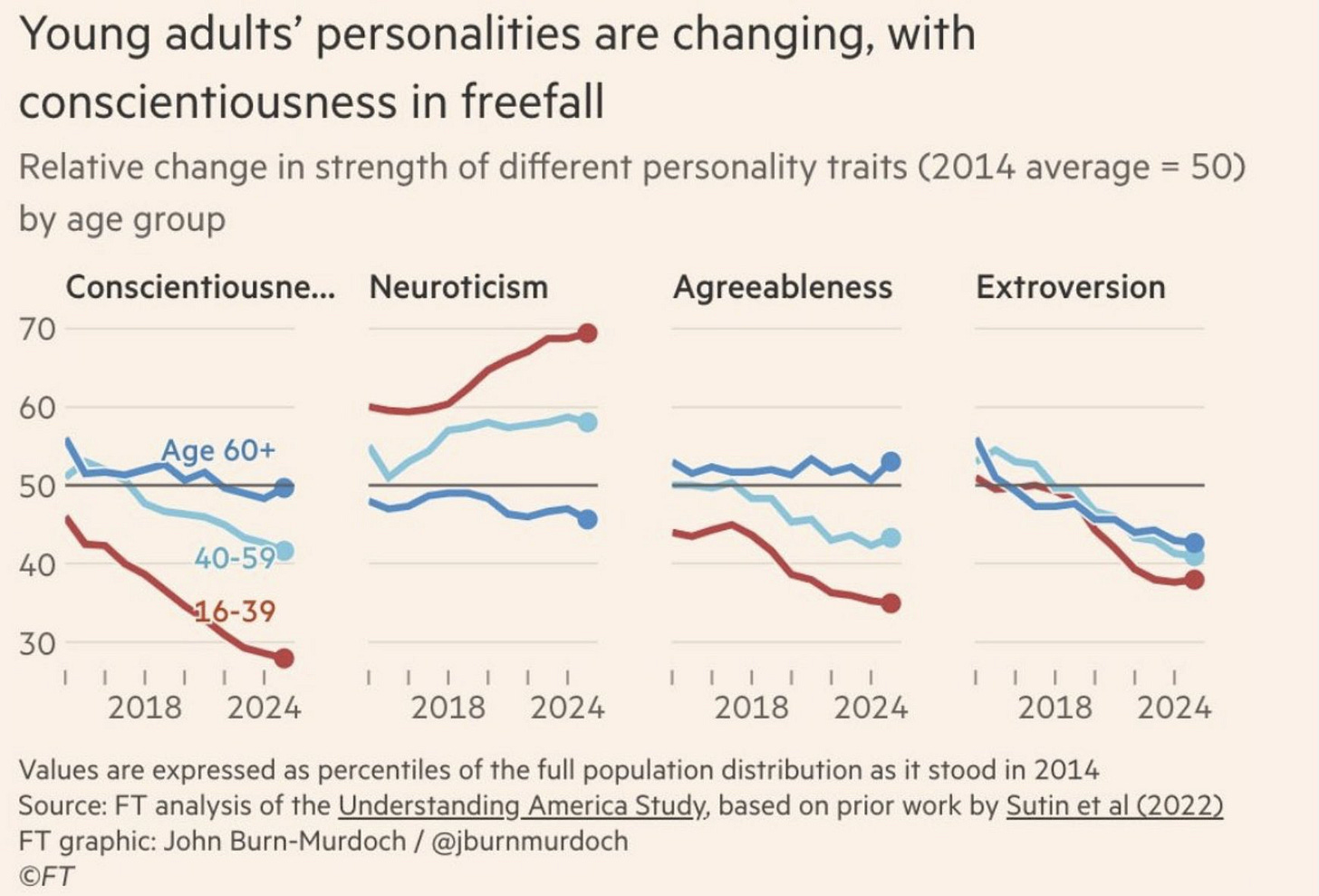

But the question also arises: were the FT’s graphs of the change a fair representation of what actually happened? Here are the graphs the FT presented and which went viral:

Those do look bad! The graphs that went viral appear to show dramatic changes. But in an exchange on X, the article author John Burn-Murdoch acknowledged engaging in a lot of data manipulation to get those graphs from the raw form into the form published in the FT. He even acknowledged that different types of data manipulation could make the graphs vary widely in outcome, claiming some outcomes were even more dramatic looking than this. That’s…not great though, is it? He stated he sought to provide a version that was less dramatic, but I’m skeptical of that given all his claims of "troubling decline", "sustained erosion", "collapsing conscientiousness" and “freefall.” Not to mention that, of course, the graphs he published appear to show a far more dramatic decline in conscientiousness than the raw data.

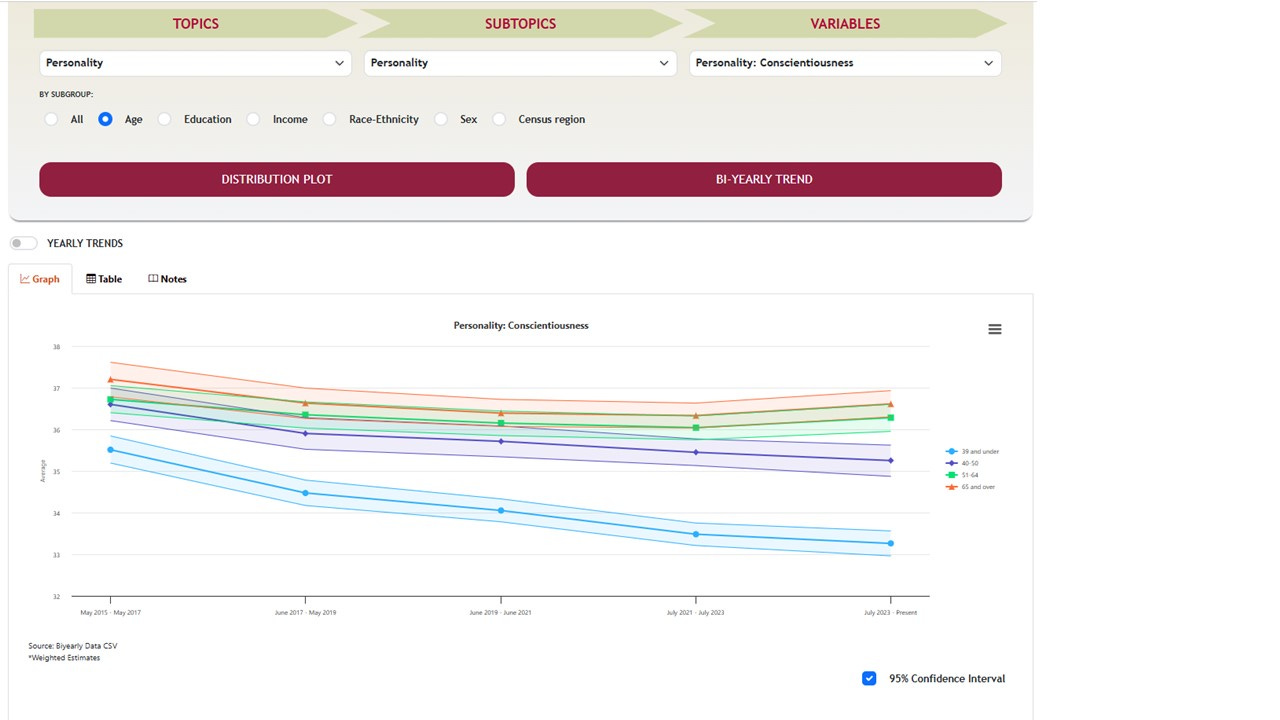

I accessed the UAS data myself. Using their interactive portal, it’s clear that the decline in conscientiousness is far more modest than Mr. Burn-Murdoch purported. The youngest age group, 39 and younger, which was the focus of all the fuss, shows a mean conscientiousness score of 35.51 out of a range of 9-45 in their 2015-2017 data, the first data point available. That’s actually pretty high. By the latest data point, 2023-present, it had dropped to 33.26…actually still pretty high[2]. That’s a drop of a mere 2.25 points.

Even the UAS graphs have a truncated Y-axis making the change seem a bit more dramatic than it is, a common graphics trick[3].

We can further conceptualize this in terms of effect size. Dr. Sutin in her 2022 study reported standard deviations (the typical amount by which the average person varies away from the mean score) for conscientiousness of between 5.43 and 5.83. For simplicity, I’ll use the middle point of that: 5.63. Dividing the difference between the start and end point scores (2.25) by that standard deviation, we get an effect size of d = .40 (about the same as r = .20)[4]. Basically this means that time, as measured by those 7-8 years, accounts for about 4% of the variance in conscientiousness. That’s a very small effect, not a “collapsing” or “troubling” change.

Effects for other Big5 personality variables were even more modest, which is why I suspect all the focus was on conscientiousness. For neuroticism, the anxiety and depression proneness we were all worried about until last week, young adults’ scores went from 22.12 to 24.03 in the same time frame, all pretty low scores. That’s a difference of 1.91[5]. Sutin reported higher standard deviations for neuroticism and I’m going to use her mean standard deviation of 6.55. This means the effect size for neuroticism would be d = .29 (about r = .145) with time explaining only about 2% of the variance in neuroticism. That’s low enough to be without any real practical significance.
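
For anyone who wants to check the arithmetic, the back-of-the-envelope figures for both traits can be reproduced in a few lines of Python. This is only a minimal sketch, not the analysis behind either the FT or the UAS charts: the `effect_size` helper is a hypothetical name of my own, the inputs are just the means and standard deviations quoted above, and the d-to-r conversion assumes two equally sized groups.

```python
import math


def effect_size(mean_start, mean_end, sd):
    """Return (d, r, r_squared) for the change between two means.

    d is the absolute mean difference divided by the standard deviation.
    r uses the common conversion r = d / sqrt(d^2 + 4), which assumes
    two groups of equal size (an assumption made for this sketch).
    """
    d = abs(mean_end - mean_start) / sd
    r = d / math.sqrt(d ** 2 + 4)
    return d, r, r ** 2


# Means and standard deviations as quoted in the text above.
TRAITS = {
    # midpoint of the 5.43 to 5.83 range of reported standard deviations
    "conscientiousness": (35.51, 33.26, (5.43 + 5.83) / 2),
    "neuroticism": (22.12, 24.03, 6.55),
}

for trait, (start, end, sd) in TRAITS.items():
    d, r, r2 = effect_size(start, end, sd)
    print(f"{trait}: d = {d:.2f}, r = {r:.2f}, variance explained = {r2:.1%}")
```

Run as written, this should give roughly d = 0.40 and just under 4% of variance for conscientiousness, and d = 0.29 and about 2% for neuroticism, in line with the rounded figures above.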

It’s worth noting the shifting of goalposts here…changes in neuroticism were near zero, so we shift to talking about conscientiousness (though those outcomes were only slightly less modest). This goalpost shifting is common to moral panics.

How to explain the discrepancy between the actual data and the transformed data Mr. Burn-Murdoch presented? I did reach out to him about that. He did respond and I really do appreciate that[6]. He reiterated many of the same points as on the X thread about the raw data being confusing to general audiences and the need to transform them to some more (to his argument) understandable form. However, I remain unconvinced by these explanations. They sound like the kind of data torturing that should make people wary of statistics[7]. And the resultant article is hardly a centerpiece of modest data analysis.

Mr. Burn-Murdoch explained that he believed that presenting the raw data would be confusing for general audiences. I don’t know why that would be. I think interpreting the raw data graph is pretty obvious. As for the effect size, even if one wished to skip the inside baseball I included above, one could simply say “these are very small changes.”

My suspicion is that doesn’t make for a great headline though. “The troubling decline in conscientiousness” is a lot more compelling than “A very small decline in conscientiousness”. My guess is the latter headline would not have gotten even a fraction of the virality that the former did, and FT absolutely knew this. Of course, then you need nifty graphs to match.

To be clear, I don’t think Mr. Burn-Murdoch meant anything cynical…he seemed sincere and pleasant in our exchange. However, people can easily convince themselves that all kinds of statistical mumbo-jumbo is warranted in pursuit of a good cause. That was the root of psychology’s replication crisis after all…in the main, not people deliberately being fraudulent, but badly trained people who are inclined to convince themselves that whatever benefits themselves happens to also be the morally right thing to do. We’re all prone to this, and I hardly excuse myself from the tendency. But it means we should treat alarming claims about moral decay with a significant grain of salt[8].

So do I think FT deliberately fudged their data: no. Do I think they tortured it until they presented it in a way that was reckless and misleading: absolutely.

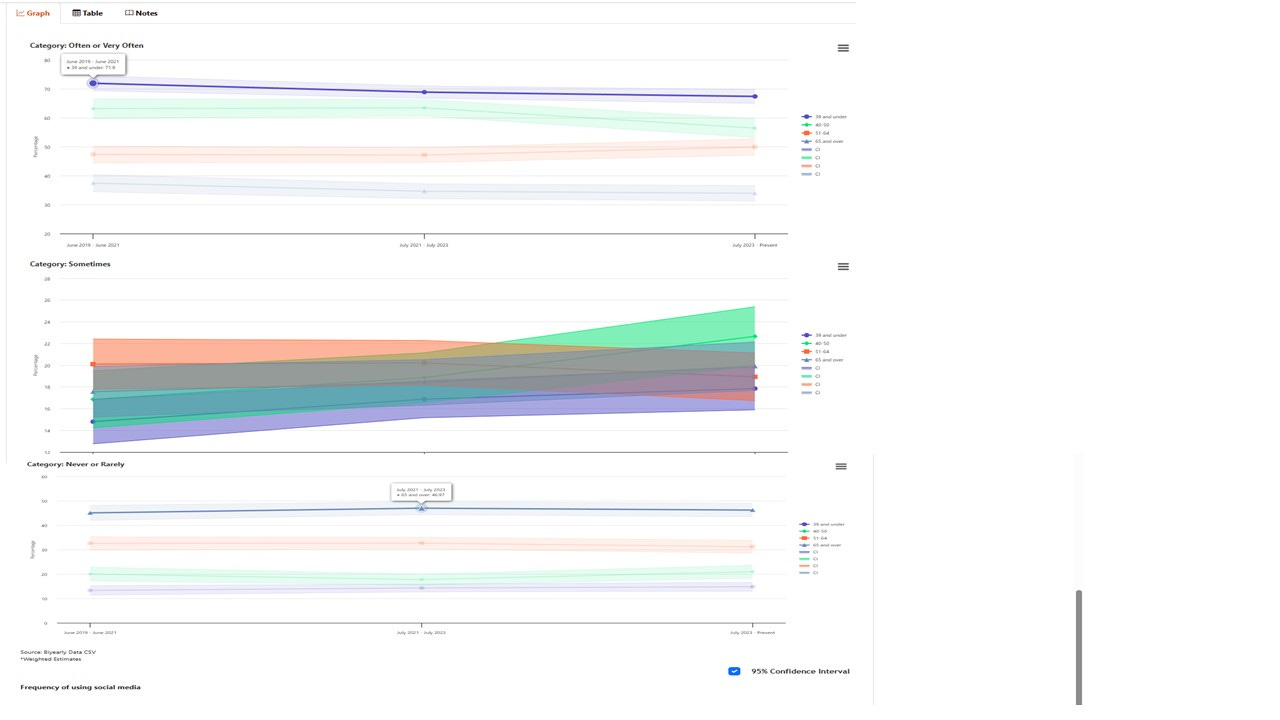

I’ll repeat that FT made causal claims about technology (among other things wrong with the world today) without even having correlational data. I realize, by saying this, I’m inviting the kinds of Nicolas Cage/Swimming Pool Death ecological fallacies about social media and youth mental health trends that have so fueled this current moral panic. So, I did look at the UAS database to see what they had on social media.

Some obvious caveats…the question on social media use is pretty crude and they only started using it in 2019, which is hardly enough time for any kind of time series analysis. However, social media use data looks pretty flat. So not likely much correlation there.

More to the point though…actual correlational research studies generally find little evidence for correlations between social media time and youth mental health, particularly when other variables such as preexisting mental health issues, family environment, school environment, and genetics are controlled. The FT could have noted this, or even simply said that scholarly opinions on this topic are divided. But they did not and charged ahead with spurious causal claims such as: “While a full explanation of these shifts requires thorough investigation, and there will be many factors at work, smartphones and streaming services seem likely culprits.”[9]

In our exchange, Mr. Burn-Murdoch did mention one other thing I thought was interesting. He expressed frustration at moral panic theory, implying current concerns about screens couldn’t be a moral panic because many people were worried about themselves being affected by technology, not just old people worried about kids. This is a misreading of moral panic theory. In fact there are plenty of moral panics where people worried about impacts on themselves.

There is of course no small dose of old people worrying about kids in the current panic. But panics over covid19 vaccines, Y2K, locomotives, microwaves, porn, 5G…not to mention the bewitching concerns at the heart of the most famous moral panic…The Salem Witch Trials…tended to be self-focused. Many moral panics are old people worrying about kids[10], but they don’t have to be and usually even when they are, it’s not too hard to find at least a few kids who will claim tech made ‘em do it. Everybody likes to shift blame away from themselves onto other things and younger generations are no exception.

It’s worth noting the UAS dataset, while remarkable in many ways, has some pretty obvious limitations. Not all its questions are great, like the social media ones I mentioned above. But also it’s internet based…meaning it can’t be generalized to the general public (like FT did). It can’t tell us how personality in America has changed…all it can tell us is about how personality changed among people who use the internet often enough to fill out internet surveys. But who uses the internet often can differ quite a bit from year-to-year…particularly during a time frame that includes the covid19 pandemic when, for a bit, everyone was home and online, and then that gradually stopped. So there’s an obvious historical effect confound to this data. My colleague, Brent Donnellan, raised similar thoughts: “The personality changes during the pandemic stuff always worried me as somewhat artifactual given disruptions in everyday lives and social norms. I think folks really need to test invariance of the measures as I think items could have drifted in relevance (e.g., content related to I go to parties or I get along with others change when people are working/studying remotely).”

Fighting the reams of bad information coming out about technology, screens, AI, etc., is kind of becoming its own full-time job of whack-a-mole[11]. It’s just a shame an esteemed outlet like FT contributed to it with tortured data and even more tortured causal claims.

[1] Which is defined as 16-39 in the UAS dataset, so not necessarily THAT young.

[2] There is also the question of the clinical value of all this. If scores go up or down but stay within the normal range…who cares, really? Let’s say you give people a test of schizophrenia with a range of 0 to 100, with scores above, say, 70 possibly indicating schizophrenia. If we find that people who listen to classical music have a mean of 10 on this schizophrenia test, well within normal, but people who enjoy Pink Floyd score a mean of 20, we can’t conclude from that that the Pink Floyd fans have more schizophrenia, since both means are still well within the normal range. We’d need to know how many people scored above the clinically significant cutoff, and a comparison of means doesn’t really tell us that.

[3] It’s very common when one wants to kind of zero in on a trend, so I’m not in any way accusing UAS of any shenanigans here. Just cautioning readers to be wary of that Y-axis (the left axis). Always be wary of the Y-axis…nothing lies like a Y-axis!

[4] These are all back of the envelope calculations, to be fair, but I suspect I’m not too far off.

[5] Which, again, tells us nothing about, say, how many people developed mental health disorders.

[6] I hate how these things can become so personal so fwiw let me say: I am sure Mr. Burn-Murdoch meant well and is sincere. I haven’t the slightest interest in any kind of feud. This is about data, not about the author.

[7] There’s a reason “There are three kinds of lies: Lies, damned lies and statistics” is a common quote, often attributed, albeit apocryphally, to Mark Twain and/or Benjamin Disraeli.

[8] And some version of the “kids today are less conscientious than in our day” argument has literally been around since the ancient Athenians.

[9] Typically if something requires “thorough investigation”, one should do said thorough investigation before coming to and proclaiming a conclusion. Besides, “thorough investigation” has already happened and largely cleared technology as the culprit for any modern woes. Just a lot of people don’t want to hear that.

[10] Or, probably more accurately, not liking kids.

[11] I’m so exhausted, y’all. XD